About

I’m an AI4SE researcher building trustworthy, efficient, and sustainable software using AI.

I currently work as a Research Associate in AI for Software Engineering at King’s College London, contributing to the ITEA4 GENIUS project, a multinational collaboration leveraging GenAI and LLMs to enhance the software development life cycle. I am a member of the Software Systems (SSY) group in the Department of Informatics, supervised by Dr Jie M. Zhang, Dr Gunel Jahangirova, and Prof Mohammad Reza Mousavi. My work focuses on developing quality assurance methods for LLM-based software engineering, ensuring the functionality, quality, and architectural soundness of both human-written and AI-generated software systems.

Previously, from June 2024 to November 2025, I worked as a KTP Associate with both the University of Leeds and TurinTech AI, focusing on compiler- and LLM-based code optimisation. We successfully completed the two-year KTP plan in just one and a half years. At the University of Leeds, I was a member of the Intelligent Systems Software Lab (ISSL) and the Distributed Systems and Services (DSS) research group, supervised by Prof Jie Xu and Prof Zheng Wang. At TurinTech AI, I was a member of the Data Science team led by Dr Fan Wu and Dr Paul Brookes.

I completed my PhD in Dec 2024 in the Department of Computer Science at Loughborough University, supervised by Dr Tao Chen in the IDEAS Laboratory (Intelligent Dependability Engineering for Adaptive Software Laboratory). My PhD thesis received the SPEC Kaivalya Dixit Distinguished Dissertation Award 2024, a prominent award in computer benchmarking, performance evaluation, and experimental system analysis.

Research Interests

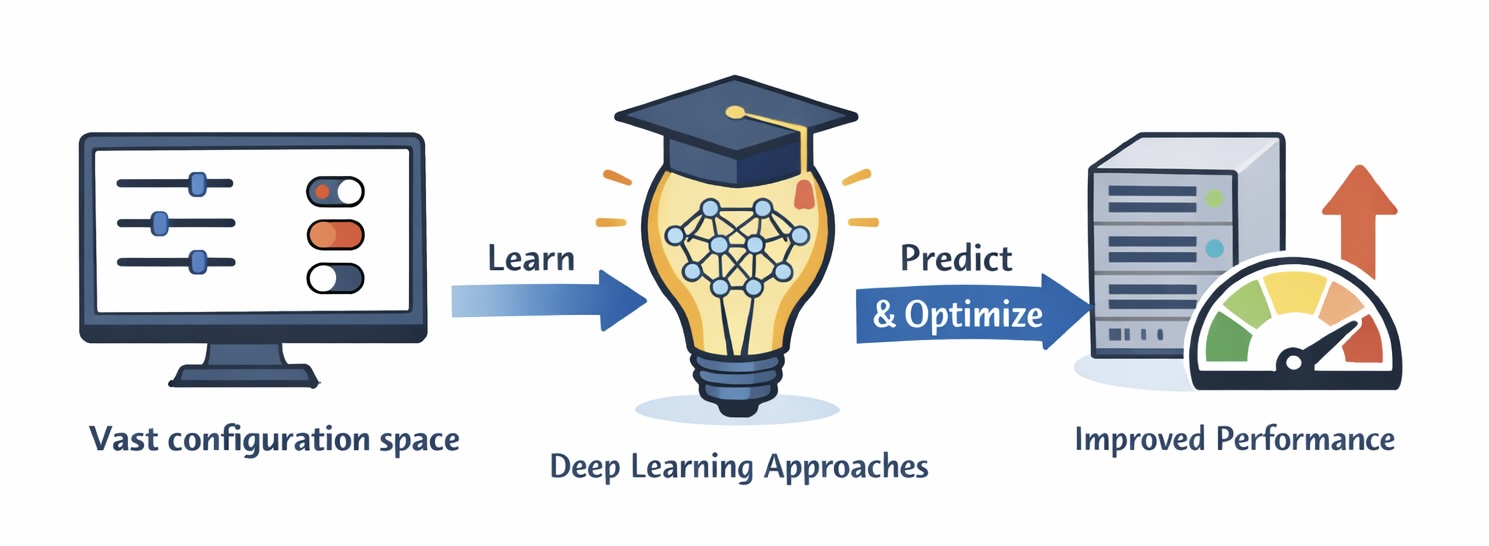

Software Configuration Performance Engineering

Build data-driven ML/DL approaches that learn high-dimensional configuration spaces to predict and optimise performance without exhaustive benchmarking, while tackling challenges such as feature sparsity, rugged performance spaces, and cross-environment drift (versions, hardware, workloads).

Why it matters: Enables earlier performance issue detection, software adaptability and autoscaling, and faster product evolution with far fewer measurements.

Trustworthy AI-assisted Software Development

Develop quality assurance and optimisation methods for LLM-based software engineering, focusing on how to evaluate, compare, and improve AI-assisted coding workflows under realistic constraints (correctness, robustness, cost, sustainability). Search-based software engineering (SBSE) strategies are used to orchestrate LLMs and make results more reliable in practice.

Why it matters: Transforms unverified, ad-hoc LLM-assisted coding into a reproducible engineering process, reducing computing resources and carbon footprint.

GenAI for Code Performance Optimisation

Apply search-based multi-LLM optimisation and meta-prompting for robust code scoring and optimisation, combined with ensembling and compiler techniques; these methods are implemented in commercial platforms via TurinTech AI and evaluated on real production workloads.

Why it matters: Delivers verifiable speedups and cost reductions on production codebases while making GenAI systems more reliable and auditable in practice.

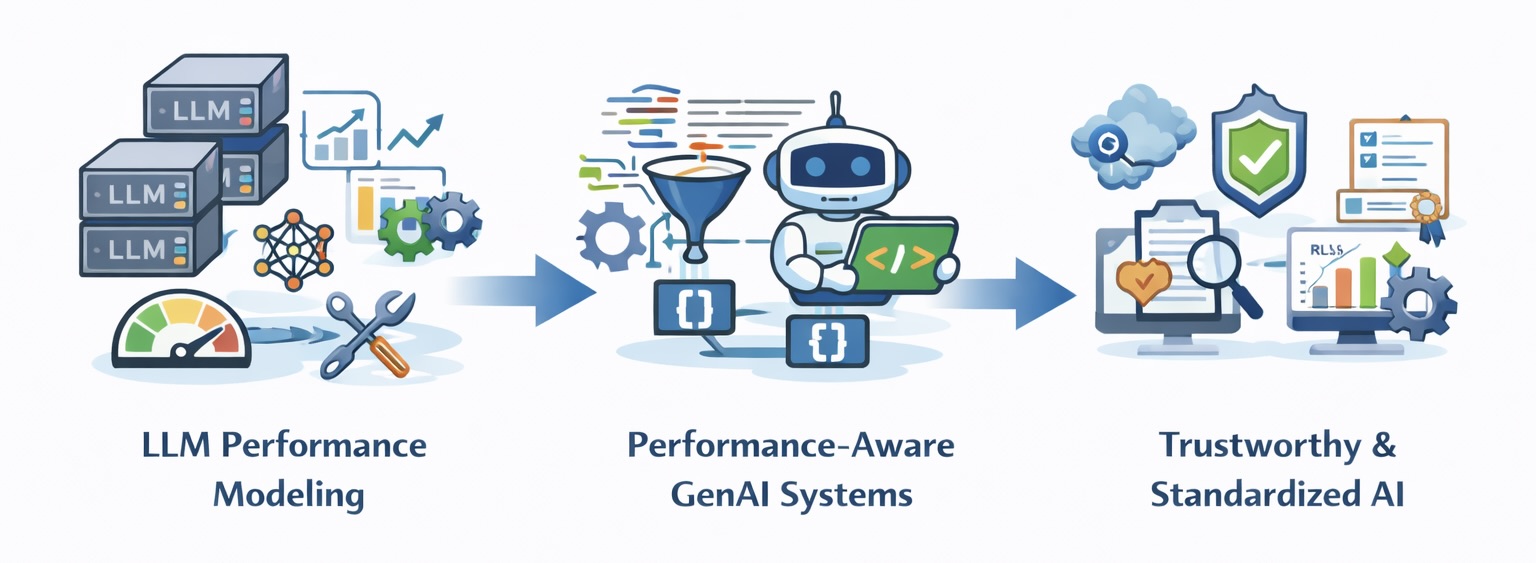

General AI4SE & SE4AI

Explore broader AI4SE and SE4AI directions, including LLM performance modelling (hybrid models + online adaptive tuning), performance-aware GenAI systems (dynamic prompt engineering + configuration tuning), trustworthy GenAI (RLHF + uncertainty verification), and industry standards and tooling (benchmarks, profiling, static analysis, CI/CD integration).

Why it matters: Makes GenAI systems predictable and safe in real-world workloads, enabling reproducible evaluation, faster industrial adoption, and lower compute and carbon footprints.

If you’re interested in collaboration, please feel free to reach out!

News

- Feb 2026 [Service] Served as Program Committee Member for ISSTA 2026.

- Jan 2026 [Paper] 'Analyzing Message-Code Inconsistency in AI Coding Agent-Authored Pull Requests' and 'Comparing AI Coding Agents: A Task-Stratified Analysis of Pull Request Acceptance' accepted at the MSR 2026 Mining Challenge track.

- Jan 2026 [Service] Served as Program Committee Member for ICST 2026.

- Nov 2025 [Award] Received the Distinguished Reviewer award at ICSE 2025 Shadow PC.

- Oct 2025 [Service] Served as Junior Program Committee Member for MSR 2026.

- Oct 2025 [Paper] 'GA4GC: Greener Agent for Greener Code via Multi-Objective Configuration Optimization' accepted at SSBSE 2025 as a challenge track paper.

- Sep 2025 [Service] Served as Program Committee Member for WWW 2026.

- Sep 2025 [Paper] 'Tuning LLM-based Code Optimization via Meta-Prompting: An Industrial Perspective' accepted at ASE 2025 as an industry showcase paper (acceptance rate 44%, 61/139).

- Jul 2025 [Service] Served as Shadow Program Committee Member for ICSE 2026.

- Jun 2025 [Paper] 'Dually Hierarchical Drift Adaptation for Online Configuration Performance Learning' accepted at ICSE 2026 in the first round (acceptance rate 9.29%, 60/646).

- Jun 2025 [Paper] 'Learning Software Bug Reports: A Systematic Literature Review' accepted at TOSEM as a journal paper.

- Jan 2025 [Award] Awarded the SPEC Kaivalya Dixit Distinguished Dissertation Award 2024, a prominent award in computer benchmarking, performance evaluation, and experimental system analysis.

Further Background

I received first-class BSc degrees from both the Information and Computing Science programme at Xi’an Jiaotong-Liverpool University (2014-16) and the Computer Science course at the University of Liverpool (2016-18).